Legacy systems, once the bedrock of business operations, often become technological anchors in a high-velocity market. Their rigid architectures, high maintenance costs, and incompatibility with modern tools can stifle innovation, slow down delivery cycles, and create significant security vulnerabilities. While the 'rip and replace' fantasy is tempting, it's rarely practical or affordable. The real challenge lies in choosing the right strategy to incrementally dismantle risk and build value. This is where a clear understanding of legacy system modernization approaches becomes a critical business advantage.

Instead of a one-size-fits-all solution, a successful modernization journey requires a tailored plan. The correct approach depends on your specific system's architecture, business objectives, risk tolerance, and budget. For instance, migrating a mainframe application to a cloud infrastructure demands a different strategy than exposing its core functions through APIs for a new mobile application. This guide is designed to cut through the complexity and provide a clear, actionable roadmap.

We will dissect eight distinct and proven modernization strategies, moving beyond high-level theory to offer practical implementation details. For each approach, you will find:

- A clear definition of the strategy and its core principles.

- Actionable pros and cons to weigh against your business needs.

- Best-fit scenarios to help you identify when to apply a specific method.

- Practical examples, such as using the Strangler Fig pattern to incrementally replace components of a monolithic e-commerce platform or containerizing a critical backend service to run on Kubernetes.

This comprehensive roundup will equip you with the knowledge to make informed decisions, transforming your technology stack from a liability into a powerful engine for agility and sustainable growth.

1. Replatforming (Lift and Shift): The Quick-Win Migration

Replatforming, often grouped with "lift and shift," is one of the most direct legacy system modernization approaches. It involves moving an application from its current environment, such as an on-premise data center, to a new infrastructure, like a public or private cloud, with minimal changes to the core code and architecture. Strictly speaking, a pure lift and shift (rehosting) changes nothing about the application, while replatforming allows small platform-level adjustments such as adopting a managed database. The primary goal is to quickly leverage the benefits of the new platform, such as improved scalability, reliability, and reduced operational overhead, without the time and expense of a complete overhaul.

Think of it as moving your application from an old house into a new one. The furniture (your code and data) stays the same, but the new house (the cloud platform) offers better plumbing, electricity, and security. A practical example would be a retail company moving its on-premise Oracle e-commerce database to a managed service like Amazon RDS for Oracle. The database software remains the same, but the operational burden of hardware maintenance, backups, and patching is shifted to the cloud provider.

When to Use Replatforming

This approach is ideal for organizations seeking immediate infrastructure cost savings and operational efficiencies. It's a pragmatic first step for companies starting their cloud journey, allowing them to exit costly data centers quickly. Replatforming is also a strong choice for applications that are stable and perform well but are hampered by aging hardware.

A frequently cited cloud migration is Netflix's move to AWS. Faced with massive scaling challenges in its own data centers, Netflix moved its services to AWS, which allowed the company to handle its explosive growth and achieve global reach. Netflix has described rebuilding much of its technology as cloud-native microservices during that multi-year move rather than shifting it unchanged, so it illustrates what the target platform makes possible more than a pure lift and shift. General Electric, for its part, migrated hundreds of its industrial applications to Microsoft Azure to streamline operations and tap into cloud analytics capabilities with a faster time-to-market than a full refactor would have allowed.

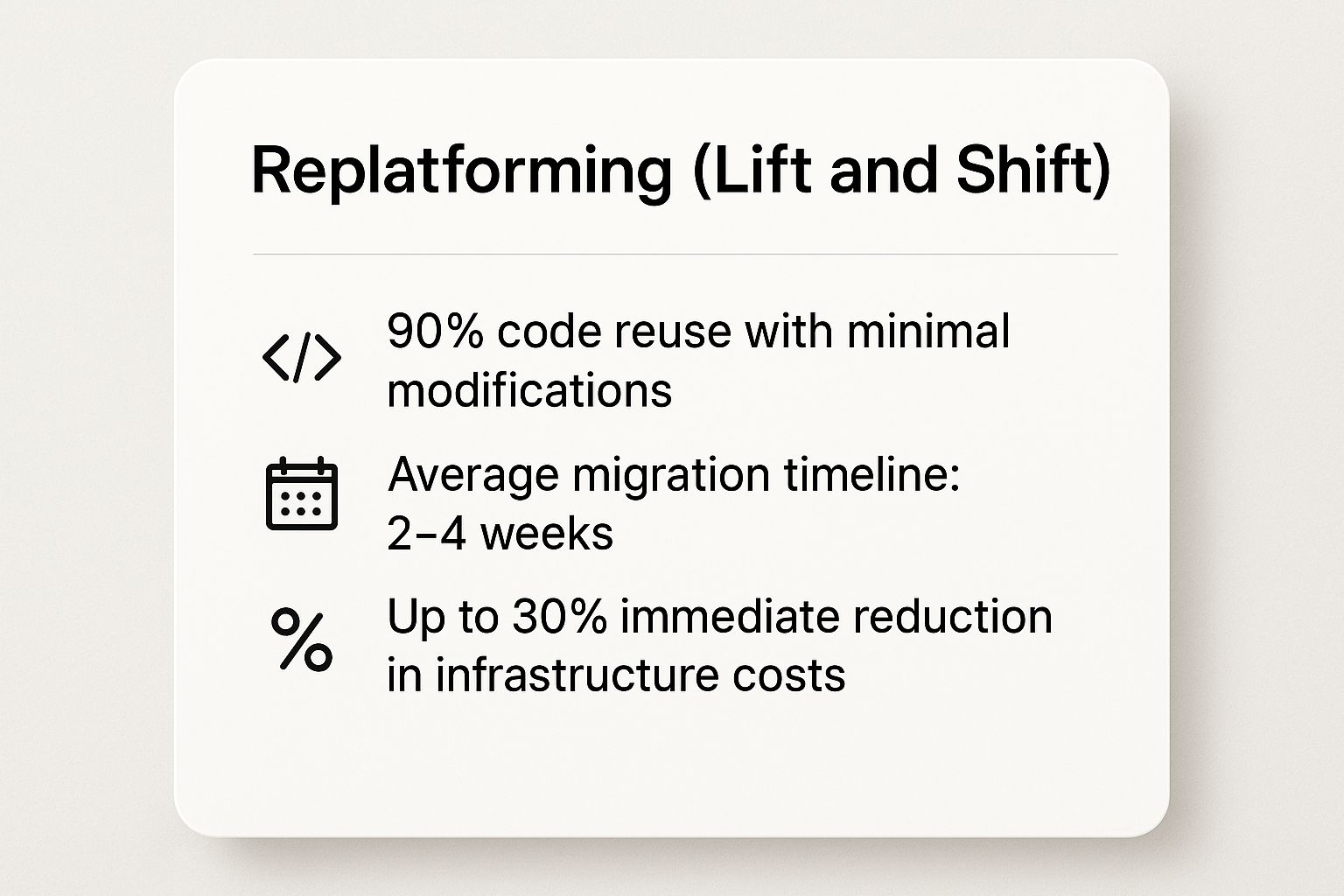

The infographic below highlights key metrics that make replatforming an attractive option for many businesses.

These figures demonstrate why replatforming is considered a "quick-win," offering significant cost reduction and a rapid migration timeline with minimal code disruption.

Actionable Implementation Tips

To ensure a smooth transition, focus on meticulous planning and execution.

- Conduct Thorough Dependency Mapping: Before migrating, map out all application dependencies, including databases, external APIs, and network connections. Unforeseen dependencies are a common cause of migration failures.

- Start with a Proof of Concept (PoC): Choose a non-critical application for an initial migration. This PoC helps your team build experience, identify potential roadblocks, and refine the migration process before tackling business-critical systems.

- Optimize Post-Migration: Replatforming is often the beginning, not the end. Once the application is stable on the new platform, plan for gradual optimization. This could involve right-sizing cloud instances, adopting managed database services, or containerizing parts of the application to further enhance performance and reduce costs.
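The dependency-mapping tip above can be turned into a migration order. The following is a minimal sketch, assuming a hand-maintained dependency map; the component names are hypothetical, and a real inventory would usually come from discovery tooling rather than a literal dictionary.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each key depends on the components in its set.
DEPENDENCIES = {
    "storefront": {"orders-db", "payment-api"},
    "reporting": {"orders-db"},
    "payment-api": {"orders-db"},
    "orders-db": set(),
}


def migration_waves(dependencies):
    """Group components into waves; each wave depends only on earlier waves."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = migration_waves(DEPENDENCIES)
```

Components in the same wave have no dependencies on each other, so they are candidates for migrating in parallel. A cycle in the map surfaces as an error before any migration starts, which is usually when you want to find out.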

2. Refactoring (Code Optimization): Enhancing from Within

Refactoring is a disciplined technique for restructuring existing computer code, altering its internal structure without changing its external behavior. As one of the core legacy system modernization approaches, it focuses on improving non-functional attributes like readability, maintainability, and performance. The primary goal is to pay down technical debt, making the codebase cleaner and more efficient without disrupting the system's core functionality.

Think of it as renovating a structurally sound house. You aren't moving or rebuilding it; instead, you're rewiring the electricity, updating the plumbing, and reorganizing the layout for better flow. A practical example is a development team taking a single, 2,000-line function that handles order processing and breaking it into smaller, more manageable functions like validateOrder(), calculateTaxes(), and updateInventory(). The application's checkout feature remains identical to the end-user, but the code becomes significantly easier for developers to test, debug, and extend.
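As a rough illustration of that decomposition, here is a sketch in Python. The function names mirror the ones mentioned above in snake_case; the `Order` fields, the flat tax rate, and the dictionary-based inventory are assumptions made for the example, not details of any real system.

```python
from dataclasses import dataclass

TAX_RATE = 0.08  # assumed flat rate, for illustration only


@dataclass
class Order:
    sku: str
    quantity: int
    unit_price: float


def validate_order(order, inventory):
    """Reject orders that cannot be fulfilled."""
    if order.quantity <= 0:
        raise ValueError("quantity must be positive")
    if inventory.get(order.sku, 0) < order.quantity:
        raise ValueError(f"insufficient stock for {order.sku}")


def calculate_taxes(order):
    """Return the tax owed on the order subtotal."""
    return round(order.quantity * order.unit_price * TAX_RATE, 2)


def update_inventory(order, inventory):
    """Deduct the ordered quantity from stock."""
    inventory[order.sku] -= order.quantity


def process_order(order, inventory):
    """Thin coordinator: same inputs and outputs as the original large function."""
    validate_order(order, inventory)
    subtotal = order.quantity * order.unit_price
    total = round(subtotal + calculate_taxes(order), 2)
    update_inventory(order, inventory)
    return total
```

Each helper can now be unit-tested on its own, while callers of `process_order` see no change in behavior.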

When to Use Refactoring

This approach is ideal for business-critical applications that are functionally sound but suffer from high technical debt, making them difficult and risky to update. It is a perfect strategy when the underlying architecture is still viable, but the code itself has become convoluted over years of quick fixes and additions. Refactoring allows you to improve the long-term health of your software and increase developer velocity without the high cost and risk of a full rewrite.

A prime example is Spotify's continuous refactoring of its music streaming backend. To support rapid feature development and massive scale, its engineering teams constantly refactor services to improve performance and maintainability. Similarly, Slack has iteratively refactored its real-time messaging systems to handle immense concurrent user loads, optimizing code paths and simplifying complex logic to ensure a responsive user experience. These companies use refactoring to evolve their systems from within.

Actionable Implementation Tips

To ensure refactoring adds value without introducing new bugs, a methodical and disciplined process is essential.

- Implement Comprehensive Automated Testing: Before changing a single line of code, ensure you have a robust suite of automated tests. These tests act as a safety net, verifying that the system's external behavior remains unchanged after each refactoring step.

- Use Code Analysis Tools: Leverage static and dynamic code analysis tools to identify "code smells," performance bottlenecks, and overly complex sections. These tools provide a data-driven roadmap for prioritizing your refactoring efforts.

- Refactor in Small, Incremental Changes: Avoid large, sweeping changes. The core principle of safe refactoring, popularized by experts like Martin Fowler, is to make small, verifiable changes. Commit these changes frequently to isolate any potential issues and make rollbacks easier.
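One way to build the safety net described in these tips is a characterization test: run the old and new implementations over the same inputs and flag any difference. The sketch below assumes a made-up shipping-cost function; both implementations and the test cases are hypothetical.

```python
def legacy_shipping_cost(weight_kg, express):
    # Hypothetical legacy logic, kept exactly as found.
    cost = 5.0
    if weight_kg > 10:
        cost = cost + (weight_kg - 10) * 0.5
    if express:
        cost = cost * 2
    return cost


def refactored_shipping_cost(weight_kg, express):
    # Candidate replacement with the same intended behavior.
    overweight_fee = max(weight_kg - 10, 0) * 0.5
    multiplier = 2 if express else 1
    return (5.0 + overweight_fee) * multiplier


def find_behavior_changes(old, new, cases):
    """Return every input for which the two implementations disagree."""
    return [args for args in cases if old(*args) != new(*args)]


# Cover the boundary at 10 kg and both express settings.
CASES = [(w, e) for w in (0, 5, 10, 10.5, 25) for e in (False, True)]
changes = find_behavior_changes(legacy_shipping_cost, refactored_shipping_cost, CASES)
```

An empty `changes` list means the refactoring preserved behavior for the sampled inputs. It says nothing about inputs outside the sample, so boundary values deserve extra cases.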

3. Re-architecting (Rebuild): The Transformative Overhaul

Re-architecting is the most intensive of the legacy system modernization approaches, involving a complete redesign of the application's architecture. Instead of just moving or tweaking the system, this strategy fundamentally alters its structure to align with modern principles like microservices, serverless computing, or event-driven models. The primary goal is to eliminate deep-rooted technical debt, improve agility, and unlock new business capabilities that are impossible to achieve with the existing monolithic design.

This approach is like demolishing an old, structurally unsound building and constructing a new, modern skyscraper in its place. While the purpose remains the same, the foundation, materials, and design are entirely new, built for future growth and resilience. A practical example is an airline rebuilding its monolithic booking system, which handles flights, hotels, and car rentals in one massive codebase, into a set of independent microservices: a 'Flight Service', a 'Hotel Service', and a 'Payment Service', each communicating through well-defined APIs. This allows the flight team to update their service without affecting hotel bookings.

When to Use Re-architecting

Re-architecting is the ideal strategy when the legacy system's core architecture is the primary bottleneck preventing business growth, innovation, or scalability. It is best suited for mission-critical applications where incremental changes are insufficient and a strategic transformation is required to stay competitive. If an application is too complex to maintain, too slow to update, or cannot support modern business processes, a rebuild is often the only viable long-term solution.

Amazon's famed transition from a monolithic architecture to microservices is a landmark example. As the company grew, its two-tiered monolith became a major impediment to development speed. By re-architecting its platform into hundreds of autonomous services, Amazon enabled its teams to innovate and deploy independently, drastically accelerating feature delivery. Similarly, Uber rebuilt its initial platform to handle the complexities of global scaling, separating concerns like payments, trip management, and driver notifications into distinct services.

Actionable Implementation Tips

A successful rebuild requires deep strategic planning and a phased approach to manage complexity and risk.

- Start with a Comprehensive Architecture Assessment: Before writing any new code, conduct a thorough analysis of the existing system. Identify business capabilities, data flows, and pain points. Techniques from domain-driven design, such as mapping bounded contexts, help you turn that analysis into boundaries for your new microservices or components.

- Design for Observability from Day One: Distributed systems are inherently more complex to monitor. Integrate logging, metrics, and tracing tools into your new architecture from the very beginning. This ensures you have visibility into system performance and can troubleshoot issues effectively post-launch.

- Implement a Strangler Fig Pattern: Avoid a "big bang" rewrite. Instead, gradually replace pieces of the legacy monolith with new services. Route traffic to the new service, and once it is stable, decommission the old component. This incremental approach minimizes risk and delivers value faster.

- Plan for Data Migration and Synchronization: Develop a clear strategy for migrating data from the legacy database to the new architecture. This may involve a one-time migration, or more commonly, a period of data synchronization between the old and new systems while both are running in parallel.

4. Strangler Fig Pattern: The Gradual and Safe Replacement

The Strangler Fig pattern is a highly strategic approach to legacy system modernization, involving the incremental replacement of a legacy system's functionality. Coined by Martin Fowler, this method builds new applications and services around the old system, gradually intercepting and rerouting requests until the original system is "strangled" and can be safely decommissioned. This avoids the high risk associated with a "big bang" rewrite by enabling a phased, controlled transition.

Imagine a large, old tree (your legacy system) that is still functional but difficult to maintain. A strangler fig vine starts growing on its trunk, initially relying on the tree for support. Over time, the vine grows stronger, develops its own root system, and eventually envelops the old tree entirely. A practical example is an online retailer wanting to modernize its checkout process. They build a new microservice for payment processing. An API gateway is set up to route all website traffic. Initially, it passes all requests to the old monolith. Then, it's configured to route just the /api/payment calls to the new service, while all other requests (product search, user profiles) still go to the monolith. Over time, more services are built and routed, until the monolith handles no traffic.
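The routing decision in that example can be sketched in a few lines. This is a simplified illustration, not the configuration format of any particular API gateway; the backend URLs are hypothetical, and only the `/api/payment` prefix comes from the example above.

```python
LEGACY_BACKEND = "http://legacy-monolith.internal"   # hypothetical address
PAYMENT_SERVICE = "http://payment-service.internal"  # hypothetical address

# Path prefixes that have already been moved off the monolith.
MIGRATED_PREFIXES = {"/api/payment": PAYMENT_SERVICE}


def resolve_backend(path, migrated=MIGRATED_PREFIXES, default=LEGACY_BACKEND):
    """Return the backend that should serve this request path."""
    for prefix, backend in migrated.items():
        # Match the prefix itself or anything beneath it, but not lookalikes
        # such as /api/payments-report.
        if path == prefix or path.startswith(prefix + "/"):
            return backend
    return default  # everything not yet migrated still goes to the monolith
```

Migrating another feature then amounts to adding one entry to `MIGRATED_PREFIXES`, and rolling back means removing it again.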

When to Use the Strangler Fig Pattern

This approach is ideal for large, complex, and business-critical systems where downtime is unacceptable and a full rewrite is too risky or expensive. It’s a powerful strategy when you want to migrate to a new architecture, like microservices, while continuing to deliver business value without interruption. The pattern allows teams to learn and adapt as they go, reducing the risk of a failed modernization project.

A prominent example is Spotify's journey from a monolithic backend to a microservices architecture. Instead of rewriting everything at once, they identified functional areas and built new, independent services to handle them. These new services were deployed alongside the monolith, gradually taking over traffic and responsibilities. Similarly, GitHub used this pattern to replace its aging MySQL infrastructure, building a new system to run in parallel and slowly migrating data and operations without impacting user experience.

Actionable Implementation Tips

A successful strangler implementation requires careful planning and a disciplined, incremental approach.

- Identify Clear Boundaries: Start by analyzing the legacy system to identify logical, self-contained components or domains that can be "strangled" first. These boundaries will define your new microservices. A good starting point is often a feature that requires frequent updates or new development.

- Implement a Facade: Introduce an intermediary layer, often an API gateway or proxy, that sits in front of the legacy system. This "facade" is crucial as it will intercept incoming requests and route them to either the legacy code or the new service, making the transition seamless to end-users.

- Plan Data Synchronization Carefully: As you build new services, you must manage how data is shared between the new and old systems. Decide on a strategy early, whether it's synchronous writes, event-based synchronization, or using a shared database temporarily, to ensure data consistency throughout the migration.
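For the event-based synchronization option, a common concern is that change events may arrive twice or out of order. The sketch below shows one way to apply them idempotently, assuming each event carries a record id and a monotonically increasing version; the event shape is an assumption for illustration.

```python
from collections import deque


def apply_change(store, event):
    """Apply a change event; ignore duplicates and stale versions."""
    current = store.get(event["id"])
    if current is not None and current["version"] >= event["version"]:
        return False  # already have this version or a newer one
    store[event["id"]] = {"version": event["version"], "data": event["data"]}
    return True


def drain(events, store):
    """Apply all queued events to the target store; return how many took effect."""
    applied = 0
    while events:
        if apply_change(store, events.popleft()):
            applied += 1
    return applied
```

Because replaying an event is harmless, the old system can resend events after a failure without corrupting the new store.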

5. API-First Modernization: Unlocking Legacy Data and Functionality

API-First Modernization is a strategic approach that prioritizes exposing the data and business logic of a legacy system through modern, secure, and well-documented APIs (Application Programming Interfaces). Instead of overhauling the entire system at once, this method creates a stable interface layer that allows modern applications, microservices, and external partners to interact with the legacy core. The underlying system can then be modernized or replaced incrementally behind the API facade, minimizing disruption to business operations.

Think of this approach as installing a modern, universal translator for an old, powerful machine that speaks a proprietary language. The machine (your legacy system) continues to perform its critical functions, but the translator (the API layer) allows new devices and users to communicate with it using a standard, easily understood protocol. A practical example is a university that has a 30-year-old student information system (SIS). Instead of replacing it, the IT team builds a RESTful API layer that exposes endpoints like /students/{id}/grades and /courses/{code}/enrollment. This allows them to build a modern mobile app for students to check grades without ever touching the legacy COBOL code of the SIS.
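To make the idea concrete, here is a minimal sketch of the translation such an API layer performs for `/students/{id}/grades`. It assumes the legacy system can export fixed-width text records; the record layout, field names, and sample data are all hypothetical, and a real adapter would read from the legacy system's actual interface.

```python
import json

# Assumed fixed-width layout of one legacy grade record: (name, start, end).
FIELDS = [("student_id", 0, 8), ("course_code", 8, 15), ("grade", 15, 17)]


def parse_record(line):
    """Slice one fixed-width line into a dictionary of trimmed fields."""
    return {name: line[start:end].strip() for name, start, end in FIELDS}


def grades_response(student_id, legacy_lines):
    """Build the JSON body for GET /students/{id}/grades."""
    records = (parse_record(line) for line in legacy_lines)
    grades = [
        {"course": r["course_code"], "grade": r["grade"]}
        for r in records
        if r["student_id"] == student_id
    ]
    return json.dumps({"student_id": student_id, "grades": grades})


# Hypothetical export from the legacy system.
LEGACY_LINES = [
    "00012345CS101  A ",
    "00012345MATH201B+",
    "00099999CS101  C ",
]
body = grades_response("00012345", LEGACY_LINES)
```

The mobile app only ever sees JSON, so the fixed-width format can later be replaced without changing any client.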

When to Use API-First Modernization

This method is ideal for systems that contain valuable business logic and data but are difficult to integrate with modern digital ecosystems. It is a powerful strategy when you need to enable new digital channels, like mobile apps or partner integrations, that rely on access to legacy data. This is one of the most flexible legacy system modernization approaches because it preserves core functionality while fostering new development.

A prime example is the financial services industry. Bank of America exposed functionalities from its core banking systems through APIs, allowing it to build a modern mobile banking app and integrate with fintech partners without replacing its decades-old mainframes. Similarly, Ford's connected vehicle platform uses APIs to allow third-party developers to create apps that interact with vehicle data, effectively turning the car into a platform for innovation while the core vehicle systems remain unchanged.

Actionable Implementation Tips

A successful API-first strategy hinges on thoughtful design and robust management.

- Design APIs with Versioning in Mind: Legacy systems will evolve, and so will your APIs. Implement a clear versioning strategy (e.g., /api/v2/) from the start to introduce changes without breaking existing integrations for consumers.

- Implement an API Gateway: Use an API gateway from a provider like Kong, MuleSoft, or Amazon API Gateway. This centralizes critical functions like security (authentication and authorization), rate limiting, and traffic monitoring, decoupling these concerns from the legacy system itself.

- Document APIs Thoroughly: Create comprehensive, interactive documentation using standards like OpenAPI (Swagger). Good documentation is crucial for driving adoption among internal and external developers, accelerating the development of new applications that consume your legacy data.
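The versioning tip can be illustrated with a small dispatch sketch. It assumes URL-path versioning of the form /api/<version>/students; the handlers and record fields are hypothetical, and in practice this routing would typically live in the gateway or web framework.

```python
def get_student_v1(record):
    # Original response shape, kept unchanged for existing consumers.
    return {"name": record["first"] + " " + record["last"]}


def get_student_v2(record):
    # New response shape, introduced without touching v1.
    return {"first_name": record["first"], "last_name": record["last"]}


HANDLERS = {"v1": get_student_v1, "v2": get_student_v2}


def dispatch(path, record):
    """Pick a handler from a path shaped like /api/<version>/students."""
    parts = path.strip("/").split("/")
    if len(parts) < 2 or parts[0] != "api" or parts[1] not in HANDLERS:
        raise LookupError(f"unsupported API path: {path}")
    return HANDLERS[parts[1]](record)
```

Existing integrations keep calling v1 and receive the response they were built against, while new consumers can adopt v2 on their own schedule.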

6. Database Modernization: Powering Modern Analytics and Performance

Database modernization is a focused legacy system modernization approach that targets the core of many enterprise applications: the data layer. It involves migrating legacy databases (like on-premise Oracle or SQL Server instances) to modern platforms, which could be cloud-native databases, data warehouses, or data lakes. The goal is to unlock data, improve performance and scalability, and enable advanced analytics capabilities that older systems simply cannot support.

This approach is like upgrading the entire library and cataloging system of a historic building. You're not just moving the books (data); you're reorganizing them into a more accessible, searchable, and scalable system. A practical example is a media company migrating its user activity logs from a traditional on-premise SQL Server to a cloud data warehouse like Google BigQuery. This move allows their data science team to run complex queries to analyze user behavior and generate personalized content recommendations—a task that would have been too slow and resource-intensive on the original database.

When to Use Database Modernization

This strategy is essential for organizations whose growth and innovation are bottlenecked by data-related issues. If your legacy database suffers from poor performance, high licensing costs, scalability limits, or an inability to support modern analytics and AI/ML workloads, modernization is the answer. It's particularly critical for data-driven companies aiming to leverage business intelligence and predictive analytics for a competitive edge.

A prime example is Airbnb's transition to Amazon Redshift. As the company grew, its existing data infrastructure couldn't handle the scale of analytical queries needed for business insights. Migrating to a modern cloud data warehouse enabled them to analyze massive datasets quickly, optimizing everything from pricing to user recommendations. Similarly, Pinterest migrated its self-managed MySQL databases to Amazon RDS and Aurora to improve scalability and reliability, freeing up engineering resources to focus on product innovation rather than database administration.

Actionable Implementation Tips

A successful database modernization requires careful planning to protect data integrity and minimize service disruption.

- Perform a Thorough Data Quality Assessment: Before migration, profile your existing data to identify inconsistencies, duplicates, and inaccuracies. Migrating "dirty" data to a new system will only amplify existing problems. Use data quality tools to clean and standardize data pre-migration.

- Implement Robust Backup and Recovery Procedures: The new database environment must have a bulletproof backup and recovery plan. Test this plan rigorously before going live to ensure you can restore data quickly and reliably in case of failure. This is non-negotiable for business-critical data.

- Plan for Minimal Downtime: Use migration strategies like "trickle" or parallel-run migrations where possible. For instance, you can set up data replication between the old and new databases, allowing you to switch over with near-zero downtime once the new system is fully synced and tested.
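A trickle-style copy with a verification step might look like the sketch below. It uses two in-memory SQLite databases as stand-ins for the old and new systems; the `users` table, its columns, and the batch size are assumptions chosen to keep the example self-contained.

```python
import sqlite3


def copy_in_batches(source, target, batch_size=2):
    """Copy rows in primary-key order, one small batch at a time."""
    last_id = 0
    copied = 0
    while True:
        rows = source.execute(
            "SELECT id, email FROM users WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            return copied
        with target:  # each batch is committed as its own transaction
            target.executemany(
                "INSERT OR REPLACE INTO users (id, email) VALUES (?, ?)", rows
            )
        last_id = rows[-1][0]  # resume after the last copied key
        copied += len(rows)


def row_count(conn):
    return conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]


# Stand-ins for the legacy and modern databases.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
source.executemany(
    "INSERT INTO users (email) VALUES (?)",
    [("a@example.com",), ("b@example.com",), ("c@example.com",)],
)
source.commit()

copied = copy_in_batches(source, target)
counts_match = row_count(source) == row_count(target)
```

Matching row counts are only a first check. Before cutover, comparing checksums or sampled rows gives stronger evidence that the data arrived intact.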

7. Containerization and Orchestration: The Operational Overhaul

Containerization is a powerful legacy system modernization approach that involves encapsulating an application and its dependencies into a standardized, portable unit called a container. This method, popularized by tools like Docker, allows legacy software to run consistently across different computing environments. When combined with an orchestration platform like Kubernetes, it modernizes not just the application's packaging but its entire deployment, scaling, and management lifecycle without requiring extensive code changes.

Think of it as placing your entire application, along with its specific operating system libraries and configurations, into a self-contained, lightweight box. This box can be moved and run anywhere, from a developer's laptop to on-premise servers or the cloud, eliminating the classic "it works on my machine" problem. A practical example is a company with a monolithic Python 2.7 application that relies on an old version of Linux. They can create a Docker container that bundles the application code, the Python 2.7 runtime, and all specific OS libraries. This container can then be deployed on a modern Kubernetes cluster, simplifying deployment and allowing it to run alongside newer applications without conflicts.

When to Use Containerization and Orchestration

This approach is ideal for organizations looking to improve deployment velocity, automate scaling, and enhance infrastructure efficiency without embarking on a full-scale refactoring project. It is particularly effective for applications that are difficult to install or have complex dependencies. By containerizing, you abstract away the underlying infrastructure, paving the way for a cloud-native or hybrid-cloud strategy.

A prime example is Spotify, which migrated its infrastructure to Kubernetes to manage its vast microservices architecture. This move allowed its engineering teams to deploy services independently and scale them efficiently to serve millions of users. Similarly, ING Bank adopted a container platform to modernize its development pipeline, accelerating the delivery of new features and improving the resilience of its banking applications.

Actionable Implementation Tips

A successful containerization strategy requires a focus on both packaging the application and managing it effectively at scale.

- Start with Stateless Applications: Begin your containerization journey with stateless applications. These services are simpler to manage in an orchestrated environment because they don't retain session data, making them easy to scale, restart, and replace without data loss.

- Implement Robust Security Scanning: Container images can contain vulnerabilities. Integrate automated security scanning tools into your CI/CD pipeline to scan images for known security issues before they are deployed to production.

- Invest in Monitoring and Observability: Orchestrated environments are dynamic and complex. Implement comprehensive monitoring, logging, and tracing tools to gain deep visibility into container performance, resource consumption, and application health, allowing you to troubleshoot issues quickly.

8. Hybrid Integration Platform: The Interoperability Bridge

The Hybrid Integration Platform (HIP) approach focuses on creating a unified, middleware-based integration layer that connects legacy systems with modern applications and cloud services. Instead of replacing the legacy core immediately, this strategy builds a bridge, enabling seamless communication and data flow between old and new worlds. This allows for gradual modernization while ensuring business continuity and system interoperability.

Think of it as building a central train station for your IT landscape. The old, local train lines (your legacy systems) can now connect with new high-speed rail networks (cloud services and modern apps). Passengers and cargo (data and processes) can transfer smoothly between them without needing to rebuild the entire railway from scratch. A practical example is a manufacturing company using a HIP like MuleSoft Anypoint Platform to connect its on-premise SAP ERP system with a cloud-based Salesforce CRM. When a new sales order is created in Salesforce, the HIP automatically triggers a process to create the corresponding order in the legacy ERP, ensuring data is synchronized across both systems without manual intervention.
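At its core, the order synchronization in that example is a field mapping between two schemas. The sketch below shows the shape of such a transformation in plain Python; it is not MuleSoft's API, and the field names on both sides are invented for illustration rather than taken from the actual Salesforce or SAP schemas.

```python
# Hypothetical mapping from CRM field names to ERP field names.
FIELD_MAP = {
    "order_no": "order_ref",
    "account": "customer_id",
    "amount": "net_value",
}


def to_erp_order(crm_order):
    """Translate a CRM order into the shape the legacy ERP expects."""
    missing = [field for field in FIELD_MAP if field not in crm_order]
    if missing:
        # Fail loudly instead of creating a partial order downstream.
        raise KeyError(f"missing CRM fields: {missing}")
    erp_order = {
        erp_field: crm_order[crm_field]
        for crm_field, erp_field in FIELD_MAP.items()
    }
    erp_order["source_system"] = "crm"  # keep a trace of where the order came from
    return erp_order
```

Keeping the mapping in data rather than scattered through code makes it easier to review with the business owners of both systems.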

When to Use a Hybrid Integration Platform

This approach is ideal for organizations where legacy systems are too critical or complex to replace outright but must interact with modern tools. It’s a powerful strategy for enterprises with a mix of on-premise and multi-cloud environments that need to maintain data consistency and streamline business processes across disparate systems. A HIP is also invaluable for companies looking to expose legacy data and functionality as modern APIs, fostering innovation and creating new business channels.

A prime example is Deutsche Bank, which utilized an integration platform to modernize its trade finance operations. By connecting legacy mainframe systems with new digital front-ends, the bank accelerated transaction processing and improved customer experience without a risky "big bang" migration. Similarly, BMW leverages a HIP to integrate its core manufacturing systems with IoT devices on the factory floor and cloud-based analytics, optimizing production in real-time.

Actionable Implementation Tips

A successful HIP implementation hinges on robust design and governance.

- Design for High Availability and Fault Tolerance: Since the HIP becomes a critical backbone for your operations, build in redundancy and automatic failover mechanisms. Ensure that a failure in one connected system or the platform itself does not bring down the entire ecosystem.

- Implement Comprehensive Monitoring and Alerting: Use centralized tools to monitor API calls, data flows, and system performance across the platform. Set up proactive alerts to detect anomalies, latency issues, or integration failures before they impact business processes.

- Plan for Message Queuing and Error Handling: Implement message queues to manage data flow and prevent legacy systems from being overwhelmed by requests from faster, modern applications. A robust error-handling strategy is crucial for retrying failed transactions and ensuring data integrity.
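The queuing and error-handling tip can be sketched as a consumer that retries failed deliveries and sets aside messages that keep failing. This is a single-threaded illustration; the `deliver` callable, the use of `ConnectionError` as the retryable failure, and the attempt limit are assumptions, and a production version would also wait between attempts.

```python
import queue


def process_with_retries(messages, deliver, max_attempts=3):
    """Deliver each queued message, retrying failures; return the dead letters."""
    dead_letters = []
    while not messages.empty():
        message = messages.get()
        for attempt in range(1, max_attempts + 1):
            try:
                deliver(message)
                break  # delivered, move on to the next message
            except ConnectionError:
                if attempt == max_attempts:
                    # Give up on this message but keep it for later inspection.
                    dead_letters.append(message)
    return dead_letters
```

Messages in the dead-letter list are not lost, so they can be replayed once the legacy system recovers or inspected to find a systematic fault.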

Legacy System Modernization Approaches Comparison

| Modernization Approach | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |
|---|---|---|---|---|---|
| Replatforming (Lift and Shift) | Low to moderate | Moderate (mainly infrastructure) | Fast migration, infrastructure upgrade | Quick cloud migration, minimal code change | Fastest modernization, lower initial cost |
| Refactoring (Code Optimization) | Moderate to high | Skilled developers, testing | Improved code quality and performance | Code maintainability, debt reduction | Better maintainability, reduced technical debt |
| Re-architecting (Rebuild) | High | Extensive planning and skilled teams | Scalable, flexible modern architecture | Major scalability/performance needs | Maximum scalability, future-proof architecture |
| Strangler Fig Pattern | Moderate | Careful planning, integration | Incremental modernization, zero downtime | Gradual migration without disruption | Minimizes disruption, continuous operation |
| API-First Modernization | Moderate | API design, security expertise | Modern integration, interoperability | Systems needing API exposure and modernization | Enables gradual modernization, preserves logic |
| Database Modernization | Moderate to high | Data migration and DBA skills | Enhanced performance, analytics | Legacy databases requiring upgrade | Improved scalability and analytics |
| Containerization and Orchestration | Moderate to high | Container/orchestration skills | Portable, scalable deployment | Portability, scalable deployment needs | Enhanced portability, resource efficiency |
| Hybrid Integration Platform | Moderate | Integration expertise | Unified hybrid system interoperability | Connecting legacy with cloud/modern apps | Preserves investments, reduces integration complexity |

Choosing Your Path: A Strategic Conclusion

The journey through the various legacy system modernization approaches reveals a fundamental truth: there is no single "best" method. The optimal path is not a pre-defined route but a custom-built strategy tailored to your organization's unique landscape. We've explored a spectrum of options, from the relatively straightforward Replatforming (Lift and Shift) to the deeply transformative process of Re-architecting from the ground up. Each approach offers a distinct balance of speed, cost, risk, and long-term value.

Your decision hinges on a clear-eyed assessment of your current state and future ambitions. A rapid, cost-effective cloud migration might point towards Replatforming, while a system plagued by deep-rooted technical debt and performance bottlenecks may demand the more intensive work of Refactoring or a full Re-architecture. For mission-critical systems where downtime is not an option, the Strangler Fig Pattern provides an elegant, risk-averse pathway, incrementally replacing old functionality with new services without a disruptive "big bang" cutover.

Synthesizing Your Modernization Strategy

The most powerful strategies often blend several of these legacy system modernization approaches. You might begin with an API-First Modernization to quickly expose valuable data from a monolithic mainframe, generating immediate business value while you plan a more comprehensive Database Modernization project. Simultaneously, you could use Containerization to encapsulate smaller, less complex legacy applications, making them more portable and scalable as a precursor to a larger cloud-native rebuild.

Consider a scenario where a financial institution relies on a legacy core banking system. They could:

- Start with API-First: Create a secure API layer around the legacy system to enable new mobile banking features, satisfying immediate market demands.

- Apply the Strangler Fig Pattern: Begin building new microservices for specific functions like loan origination, routing traffic to the new service while the old one is gradually phased out.

- Leverage Containerization: As new microservices are built, they are deployed in containers managed by an orchestration platform like Kubernetes, ensuring scalability and resilience from day one.

- Implement a Hybrid Integration Platform (HIP): Use a HIP to manage the complex data flows and API calls between the new cloud-native services and the on-premise legacy core, ensuring seamless operation during the multi-year transition.

This blended approach transforms modernization from a monolithic, high-risk project into a manageable, iterative program that delivers continuous value.

Actionable Next Steps: From Theory to Reality

Moving forward requires a structured plan. The insights gained from understanding these legacy system modernization approaches are only valuable when put into action. Here’s how to begin:

- Conduct a Portfolio Assessment: Catalog your existing systems. Evaluate them based on business value, technical health, operational cost, and associated risks. This creates a clear heat map of where to focus your efforts first.

- Define Clear Business Objectives: What do you want to achieve? Is it cost reduction, improved agility, better customer experience, or access to advanced analytics? Your "why" will dictate your "how."

- Build a Cross-Functional Team: Modernization is not just an IT project. Involve stakeholders from business, finance, and operations from the very beginning to ensure alignment and build support for the initiative.

- Start with a Pilot Project: Choose a low-risk, high-impact candidate for your first modernization effort. A successful pilot builds momentum, provides invaluable learning experiences, and demonstrates the value of the investment to the wider organization.
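The portfolio assessment step lends itself to a simple weighted score. The sketch below is one possible scheme, not a standard method: the weights, the 1 to 5 rating scale, the system names, and the use of a technical-debt rating (the inverse of technical health) are all assumptions you would tune to your own priorities.

```python
# Assumed weights: higher weight means the criterion matters more.
WEIGHTS = {
    "business_value": 4,
    "technical_debt": 3,    # inverse of technical health
    "operational_cost": 2,
    "risk": 1,
}


def priority_score(ratings):
    """Combine 1-5 ratings into a single weighted score."""
    return sum(WEIGHTS[criterion] * ratings[criterion] for criterion in WEIGHTS)


def rank_portfolio(portfolio):
    """Return system names ordered from highest to lowest priority."""
    return sorted(portfolio, key=lambda name: priority_score(portfolio[name]), reverse=True)


# Hypothetical portfolio with ratings from 1 (low) to 5 (high).
PORTFOLIO = {
    "billing-mainframe": {"business_value": 5, "technical_debt": 5, "operational_cost": 4, "risk": 4},
    "intranet-wiki": {"business_value": 2, "technical_debt": 2, "operational_cost": 1, "risk": 1},
    "order-portal": {"business_value": 4, "technical_debt": 3, "operational_cost": 3, "risk": 2},
}
ranking = rank_portfolio(PORTFOLIO)
```

The resulting order is a starting point for discussion with stakeholders rather than a final decision, since the ratings themselves are judgment calls.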

Ultimately, mastering these legacy system modernization approaches is about transforming technology from a constraint into an enabler. It’s about future-proofing your organization, empowering your development teams with modern tools, and creating a flexible, scalable foundation to support innovation. The path you choose will define your company's ability to compete and thrive in a constantly evolving digital world.
