Building a machine learning model is just the first step; deploying, monitoring, and maintaining it in production is where the real challenge begins. This is where MLOps and robust machine learning pipelines become critical. A well-structured pipeline automates everything from data ingestion and preprocessing to model training, validation, and deployment, ensuring reproducibility, scalability, and efficiency.

However, the ecosystem of machine learning pipeline tools is vast and complex, ranging from fully managed cloud services to flexible open-source orchestrators. Choosing the wrong tool can lead to vendor lock-in, operational overhead, and stalled projects. This guide cuts through the noise to help you make an informed decision.

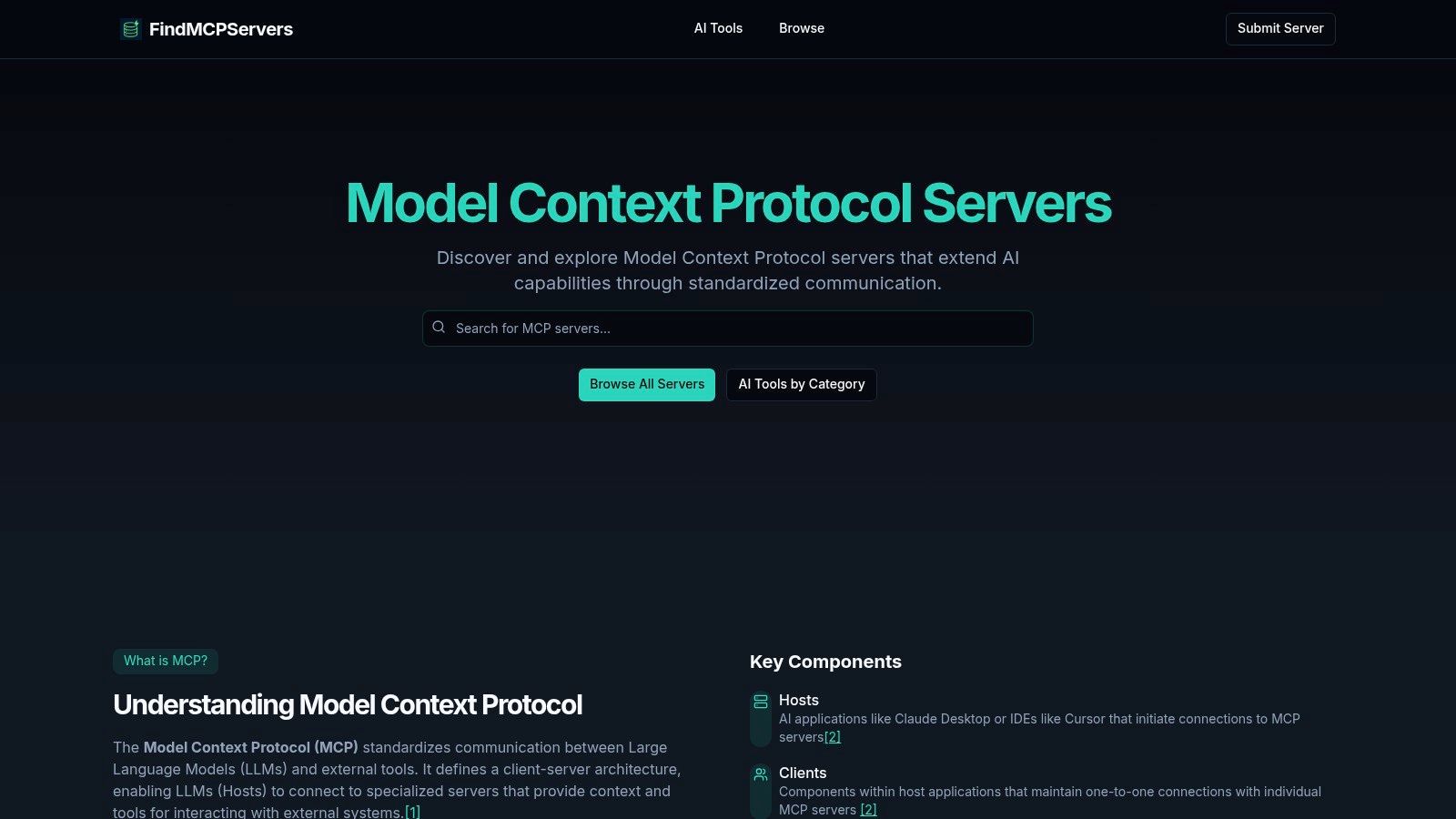

We will provide an in-depth analysis of the top 12 tools for 2025, complete with practical examples of how they solve real-world problems. For each option, you'll find direct links and screenshots to give you a clear view of its capabilities. We'll also explore how modern frameworks like the Model Context Protocol (MCP) are changing the game by standardizing how AI models interact with external systems, a crucial consideration for any forward-thinking MLOps strategy. Our goal is to provide a straightforward comparison, so you can select the best platform for your specific needs, whether you're a data scientist, AI developer, or tech entrepreneur.

1. FindMCPServers

FindMCPServers emerges as a definitive resource hub for developers building sophisticated machine learning pipelines. It uniquely addresses a critical challenge in modern AI: the seamless, standardized integration of Large Language Models (LLMs) with external tools and data sources. By centralizing resources around the Model Context Protocol (MCP), it provides a powerful framework for constructing more dynamic and capable AI systems. Instead of treating data ingestion, processing, and model interaction as separate, often incompatible stages, FindMCPServers champions a unified approach where LLMs can directly and securely interact with databases, APIs, and even live web browsers.

This platform isn't just a list; it's a structured ecosystem that empowers developers to discover and implement pre-built or custom MCP servers. This fundamentally enhances what machine learning pipeline tools can achieve by enabling models to perform actions and retrieve real-time information, moving beyond static, pre-trained knowledge.

Key Strengths and Use Cases

The primary strength of FindMCPServers lies in its strict adherence to the MCP standard, which simplifies complex integrations that would otherwise require extensive custom code. This standardization is crucial for building scalable and maintainable AI applications.

Practical Use Cases:

- Automated Data Analysis: A developer could configure an LLM-powered agent to use an MCP server for SQL databases. The agent can then autonomously query sales data, use a code execution server to perform statistical analysis with Python libraries, and finally use a browser automation server to compile the findings into a report on a web-based dashboard.

- Multi-Agent Collaboration: An AI system designed for market research can deploy multiple agents. One agent uses a web search MCP server to gather industry news, another queries internal financial databases via a dedicated server, and a third agent synthesizes the information from both to generate a competitive analysis report.

- Dynamic Application Control: An application can use an API integration server to allow an LLM to control other software services. For example, a customer service bot could directly access a CRM's API to update a user's contact information or retrieve their order history in real-time.

Platform Offerings

FindMCPServers organizes its resources into clear, functional categories, making it easy to find the right tool for any stage of your pipeline.

- Categorized Directory: Servers are logically grouped by function, including Database Querying, Web & Vector Search, Code Execution, API Integration, and Browser Automation.

- Security Focus: The platform emphasizes servers with robust security, including access controls and detailed audit logs, which are essential when granting AI models access to sensitive systems.

- Community and Updates: It fosters an active community, providing updates on new servers and protocol enhancements, ensuring developers are using the latest and most effective tools.

Website: https://www.findmcpservers.com

Pros & Cons

| Pros | Cons |
|---|---|
| Standardized Integration: Simplifies connecting LLMs to external tools via the Model Context Protocol. | Learning Curve: Assumes some user familiarity with MCP and advanced AI development concepts. |
| Broad Tool Directory: Comprehensive, categorized list of servers for diverse machine learning pipeline needs. | Resource-Focused: Primarily a discovery hub, so pricing information for individual servers is not listed. |
| Advanced Workflow Support: Enables multi-agent systems, real-time data access, and complex task execution. | |
| Strong Security Emphasis: Highlights servers with crucial features like access control and audit logs. | |
| Active Community: Keeps users informed about the latest server releases and protocol improvements. | |

2. Amazon SageMaker (Pipelines)

For teams deeply integrated within the AWS ecosystem, Amazon SageMaker Pipelines offers a powerful, fully managed solution for building, automating, and scaling end-to-end machine learning workflows. It stands out by providing a serverless orchestration service that connects every step of the ML lifecycle, from data preparation with SageMaker Data Wrangler to model training, evaluation, and deployment, all within the cohesive SageMaker Studio IDE. This tight integration is its primary differentiator, eliminating the friction of connecting disparate services.

Unlike standalone orchestrators, SageMaker Pipelines automatically tracks the lineage of all artifacts, such as datasets, code, and model versions. This provides full reproducibility and auditability, which is critical for enterprise governance. The platform offers both a visual, drag-and-drop pipeline builder for rapid prototyping and a Python SDK for programmatic, code-first definitions, catering to different user preferences. For those focused on a robust MLOps strategy, understanding the nuances of its deployment capabilities is key; you can find more details in our complete guide to machine learning model deployment on findmcpservers.com.

Key Features & Considerations

A key practical benefit is the ability to reuse pipeline steps across different projects, which accelerates development. For example, a data validation step defined in one pipeline can be easily imported and used in another, promoting consistency and reducing redundant code. For a retail company, this could mean a single, standardized "customer data cleaning" step is used in pipelines for both sales forecasting and customer churn prediction models, ensuring consistent data handling.

| Feature | Description |
|---|---|
| Deep AWS Integration | Natively connects with S3, IAM, CloudWatch, and other AWS services for a seamless workflow. |
| Serverless Orchestration | You only pay for the underlying compute resources used by each pipeline step (e.g., training jobs, processing jobs). |
| Model Registry | A central repository to version, manage, and approve models for production deployment. |
| CI/CD Templates | Provides built-in templates to integrate pipelines with CI/CD tools like AWS CodePipeline for MLOps. |

Pros:

- Scalability: Leverages the full power of AWS for handling massive datasets and complex training jobs.

- Managed Service: No need to manage or provision orchestration servers, reducing operational overhead.

- Strong Governance: Automatic lineage tracking simplifies auditing and model reproducibility.

Cons:

- Vendor Lock-in: Designed for an AWS-centric environment; portability to other clouds is challenging.

- Cost Complexity: Pricing is based on a collection of underlying AWS service charges, which can be difficult to predict and track.

Website: aws.amazon.com/sagemaker/pipelines

3. Google Cloud Vertex AI (Pipelines)

For organizations building on Google Cloud Platform, Vertex AI Pipelines provides a serverless, managed orchestration service designed to automate and scale ML workflows. It distinguishes itself by natively supporting both the open-source Kubeflow Pipelines (KFP) and TensorFlow Extended (TFX) SDKs, offering a familiar entry point for teams already versed in these ecosystems. This allows developers to define portable, component-based pipelines as code and run them without managing the underlying Kubernetes infrastructure, all within the integrated Vertex AI environment.

Unlike generic orchestrators, Vertex AI Pipelines is deeply woven into the GCP fabric, enabling seamless, low-latency connections to services like BigQuery, Cloud Storage, and Dataflow. It automatically logs metadata and tracks artifacts for every pipeline run, ensuring full lineage and reproducibility for governance and debugging. The platform provides a unified UI to visualize pipeline graphs, compare run metrics, and trace artifacts, making it one of the more user-friendly machine learning pipeline tools for teams committed to Google Cloud.

Key Features & Considerations

A significant practical advantage is its component-based architecture. A team can create a reusable KFP component for feature engineering with BigQuery, package it, and share it across multiple projects. For example, an e-commerce company could build a "user purchase history featurizer" component. This component can then be used in a product recommendation pipeline, a customer lifetime value pipeline, and a fraud detection pipeline, ensuring the core business logic is consistent and maintained in one place.

| Feature | Description |
|---|---|
| KFP & TFX Native Support | Define pipelines using the familiar Kubeflow Pipelines v2 or TFX SDKs for a smooth transition. |
| Serverless Execution | Pay-per-use pricing model where you are billed for the compute resources consumed by each pipeline step. |
| Integrated ML Metadata | Automatically captures and stores metadata about pipeline runs, artifacts, and executions for analysis. |
| Vertex AI Integration | Natively connects with Vertex AI Training, Prediction, and Feature Store for a unified MLOps workflow. |

Pros:

- Strong Open-Source Alignment: Easy adoption for teams with existing Kubeflow or TFX expertise.

- Excellent Observability: The UI provides powerful tools to visualize, compare, and debug pipeline runs.

- Managed and Secure: Runs in a secure, serverless environment with fine-grained IAM controls, reducing operational burden.

Cons:

- GCP-Centric: Optimized for the Google Cloud ecosystem; integrating with external or on-premise resources requires more effort.

- Learning Curve: While familiar for KFP users, newcomers may need time to grasp the component-based authoring paradigm.

Website: cloud.google.com/vertex-ai/docs/pipelines

4. Microsoft Azure Machine Learning (Pipelines)

For organizations heavily invested in the Microsoft Azure cloud, Azure Machine Learning offers a comprehensive, collaborative platform for building and operationalizing ML workflows. It provides a structured environment where teams can create, publish, and manage reproducible machine learning pipelines. Its primary differentiator lies in its deep integration with the broader Azure ecosystem and its strong emphasis on enterprise-grade governance, security, and reusability through shared registries.

Unlike some standalone machine learning pipeline tools, Azure ML is designed as a complete MLOps solution. It allows you to define pipelines using a YAML-based interface or a Python SDK (v2), catering to both declarative and programmatic approaches. A standout feature is the Azure ML Registry, which enables teams to share and reuse components, models, and entire pipelines across different projects and workspaces, significantly improving efficiency and consistency. For engineers aiming to embed AI into applications, understanding how these pipelines connect to deployment targets is crucial; our guide on leveraging machine learning for business growth on findmcpservers.com explores this integration further.

Key Features & Considerations

A practical benefit is the ability to trigger pipelines based on data changes or on a schedule, enabling true CI/CD for machine learning. For instance, a pipeline can be configured to automatically retrain a model whenever a new weekly dataset is uploaded to Azure Blob Storage. A hospital could use this to retrain a patient readmission prediction model weekly, ensuring the model always reflects the latest patient data without manual intervention.

| Feature | Description |
|---|---|
| Deep Azure Integration | Natively connects with Azure Kubernetes Service (AKS), Azure Data Factory, and Azure Key Vault. |
| Component-Based Pipelines | Encourages creating reusable, self-contained steps (components) that can be shared across pipelines. |
| Centralized Registry | Allows sharing and discovery of components, models, and environments across multiple teams and workspaces. |
| Enterprise Governance | Provides robust security, access control, and cost management features integrated with Azure policies. |

Pros:

- Strong MLOps Support: Excellent tooling for CI/CD, model monitoring, and automated retraining.

- Scalability & Security: Leverages Azure's global infrastructure for secure and scalable computations.

- Hybrid Workflow: Supports both a designer UI for low-code pipeline building and a code-first SDK.

Cons:

- Vendor Lock-in: Tightly coupled with the Azure ecosystem, making it difficult to migrate to other clouds.

- Pricing Complexity: Costs are an aggregate of multiple Azure services (compute, storage, etc.), which can be hard to forecast.

- SDK Transition: Teams may need to migrate legacy pipelines from the older Python SDK v1 to the newer v2.

Website: azure.microsoft.com/products/machine-learning/

5. Databricks Machine Learning (managed MLflow)

For organizations building on a data lakehouse architecture, Databricks Machine Learning provides a unified platform where data engineering, analytics, and machine learning converge. Its core strength lies in offering a fully managed and deeply integrated version of MLflow, eliminating the operational burden of hosting it yourself. This allows teams to seamlessly transition from large-scale data processing with Spark to building, training, and deploying models within a single, collaborative environment governed by the Unity Catalog.

Unlike siloed tools, Databricks connects every stage of the MLOps lifecycle directly to the underlying data. This integration simplifies governance and reproducibility, as both data and model lineage are tracked centrally. For teams already managing their data assets within Databricks, understanding how to structure this data is the first step; our guide on how to create tables in Databricks on findmcpservers.com offers a practical starting point. The platform's native support for MLflow Pipelines (renamed MLflow Recipes in MLflow 2.0) and its newer generative AI features make it one of the more comprehensive machine learning pipeline tools.

Key Features & Considerations

A practical advantage is the platform's collaborative nature. A data engineer can create a feature table using a notebook, and a data scientist can immediately access that versioned table to start an MLflow experiment, all within the same workspace and with shared access controls. For example, a data engineer can build a "daily user activity summary" table. A data scientist can then immediately use that table to train a model that predicts which users are likely to churn, drastically shortening the time from data preparation to modeling.

| Feature | Description |
|---|---|
| Unified Governance | Unity Catalog provides a single governance layer for all data and AI assets, including models and features. |
| Managed MLflow | Enterprise-ready MLflow with experiment tracking, model registry, and deployment without server management. |
| Integrated Workflows | Natively connects data processing (Spark), model development (Notebooks), and orchestration (Workflows). |
| Generative AI Tooling | Includes features for LLM evaluation, monitoring, and fine-tuning, directly within the platform. |

Pros:

- Data & ML Unification: Eliminates the gap between data preparation and machine learning, simplifying lineage and access.

- Mature Ecosystem: Leverages the robust, open-source MLflow project with extensive community support and documentation.

- Scalability: Built on top of Apache Spark for processing massive datasets and distributing training workloads.

Cons:

- Pricing Complexity: Usage-based pricing across compute, storage, and platform features can become costly at scale.

- Platform-Centric: While built on open-source, it delivers maximum value when the entire workflow is within the Databricks ecosystem.

Website: www.databricks.com/product/machine-learning

6. Kubeflow (Kubeflow Pipelines)

For organizations seeking an open-source, Kubernetes-native solution, Kubeflow Pipelines provides a powerful framework for building portable and scalable machine learning workflows. It is designed to run on any Kubernetes cluster, offering unparalleled flexibility for teams that require full control over their infrastructure, whether on-premise or across multiple cloud providers. Its key differentiator is its container-first approach, where every step in a pipeline is a containerized component, ensuring consistency and reproducibility from development to production.

Unlike fully managed services, Kubeflow empowers teams with a comprehensive, self-hosted ML platform. It leverages popular CNCF projects like Argo Workflows for orchestration, providing a robust foundation for defining complex Directed Acyclic Graphs (DAGs). The platform includes a user-friendly UI for managing and visualizing pipeline runs, alongside a Python SDK for defining workflows programmatically. This dual interface caters to both data scientists who prefer a visual overview and MLOps engineers who rely on code-based definitions for CI/CD integration.

Key Features & Considerations

A significant practical advantage is the reusability of pipeline components. For instance, a data transformation component built for one project can be packaged as a container and easily shared. A practical example would be creating a containerized component that resizes and normalizes images for a computer vision model. This same component can then be reused in pipelines for image classification, object detection, and image segmentation, ensuring identical preprocessing is applied everywhere.
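The resize-and-normalize example can be sketched in plain Python. This is a minimal illustration of the logic such a component might contain, not Kubeflow Pipelines SDK code: the function names, the nested-list image format, and the nearest-neighbor resizing are all assumptions chosen to keep the sketch dependency-free. In practice this function would be packaged into a container image and referenced from each pipeline.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of 0-255 pixel values (assumed format)


def resize_nearest(image: Image, height: int, width: int) -> Image:
    """Resize with nearest-neighbor sampling (a stand-in for a real image library)."""
    src_h, src_w = len(image), len(image[0])
    return [
        [image[r * src_h // height][c * src_w // width] for c in range(width)]
        for r in range(height)
    ]


def normalize(image: Image) -> List[List[float]]:
    """Scale pixel values from the 0-255 range into 0.0-1.0."""
    return [[px / 255.0 for px in row] for row in image]


def preprocess(image: Image, height: int = 2, width: int = 2) -> List[List[float]]:
    """The single shared preprocessing step that every vision pipeline would call."""
    return normalize(resize_nearest(image, height, width))
```

Because classification, detection, and segmentation pipelines would all call the same `preprocess` entry point, a change to the target size or scaling is made once and picked up everywhere.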

| Feature | Description |
|---|---|
| Kubernetes-Native | Runs on any Kubernetes cluster, offering portability across cloud and on-premise environments. |
| Reusable Components | Pipeline steps are self-contained container images that can be shared and reused across projects. |
| Python SDK | Define, compile (to YAML), and run pipelines programmatically for easy version control and automation. |
| Integrated MLOps Stack | Part of the broader Kubeflow ecosystem, which includes tools for serving (KServe) and hyperparameter tuning (Katib). |

Pros:

- No Vendor Lock-in: Open-source and portable, providing freedom to choose your cloud or on-premise infrastructure.

- Full Control & Customization: Offers complete control over the environment, ideal for specific security or compliance needs.

- Active Community: Backed by a strong open-source community, ensuring continuous development and support.

Cons:

- High Operational Overhead: Requires significant Kubernetes expertise to install, configure, and maintain the platform.

- Steep Learning Curve: Can be complex to set up compared to managed cloud-based machine learning pipeline tools.

Website: www.kubeflow.org

7. TensorFlow Extended (TFX)

For organizations building production-grade ML systems with TensorFlow, TensorFlow Extended (TFX) provides a powerful, open-source platform straight from Google. It is not a standalone orchestrator but a library of standardized components that define a complete machine learning pipeline. TFX distinguishes itself by offering a battle-tested, prescriptive framework for implementing MLOps, designed to run on various orchestrators like Apache Airflow, Kubeflow Pipelines, and Vertex AI Pipelines. This portability is a core strength, allowing teams to develop pipelines locally and deploy them to different production environments without rewriting the pipeline components themselves.

Unlike more general-purpose tools, TFX is deeply integrated with the TensorFlow ecosystem. It leverages ML Metadata (MLMD) to automatically track artifacts, executions, and their relationships, ensuring full lineage and reproducibility. This makes debugging and auditing complex pipelines significantly easier. The framework provides a clear, component-based model that covers the entire ML lifecycle, offering a robust foundation for teams looking for reliable machine learning pipeline tools that scale.

Key Features & Considerations

A key practical benefit is the set of pre-built, production-ready components. For example, the ExampleGen component standardizes data ingestion, while Transform performs feature engineering at scale, and Evaluator provides deep model analysis. A team building a sentiment analysis model could use the ExampleValidator component, which is built on TensorFlow Data Validation (TFDV), to automatically detect anomalies in incoming text data, such as a sudden shift in vocabulary, preventing the model from training on corrupted data.
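To make the "sudden shift in vocabulary" check concrete, here is a hand-rolled sketch of the idea in plain Python. It is not the TFDV API: TFDV works from computed statistics and a schema, whereas this stand-in simply measures how many incoming tokens were never seen in a baseline dataset. The function names, whitespace tokenization, and the 0.3 threshold are all assumptions for illustration.

```python
from typing import Iterable, Set


def build_vocabulary(texts: Iterable[str]) -> Set[str]:
    """Collect the lowercase, whitespace-separated tokens seen in the baseline data."""
    return {token for text in texts for token in text.lower().split()}


def out_of_vocab_rate(texts: Iterable[str], vocabulary: Set[str]) -> float:
    """Fraction of incoming tokens that do not appear in the baseline vocabulary."""
    tokens = [token for text in texts for token in text.lower().split()]
    if not tokens:
        return 0.0
    unseen = sum(1 for token in tokens if token not in vocabulary)
    return unseen / len(tokens)


def has_vocabulary_shift(baseline, incoming, threshold: float = 0.3) -> bool:
    """Flag the incoming batch when too many of its tokens are new (threshold is assumed)."""
    return out_of_vocab_rate(incoming, build_vocabulary(baseline)) > threshold
```

A pipeline would run a check like this before the training step and stop, or raise an alert, when it returns `True`.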

| Feature | Description |
|---|---|
| Standardized Components | Pre-built components for data ingestion, validation, transformation, training, evaluation, and serving. |
| Orchestrator Agnostic | Pipelines can be defined once and run on different orchestrators like Kubeflow, Airflow, or Vertex AI. |
| ML Metadata (MLMD) | Automatically tracks artifacts and executions to provide lineage, debuggability, and reproducibility. |
| TensorFlow Ecosystem | Deep integration with tools like TensorFlow Data Validation (TFDV) and TensorFlow Model Analysis (TFMA). |

Pros:

- Production-Ready: Proven in Google's large-scale production environments for TensorFlow-based models.

- Component Model: The standardized component structure promotes reusability and best practices.

- Portable and Flexible: Avoids lock-in to a specific orchestrator or cloud provider.

Cons:

- TensorFlow-Centric: While adaptable, it is heavily optimized for TensorFlow; using other frameworks requires significant custom work.

- Requires External Orchestrator: TFX defines the pipeline but relies on an external system (like Airflow) for execution and scheduling.

Website: www.tensorflow.org/tfx

8. Prefect (Cloud and Open Source)

Prefect offers a Python-native approach to workflow orchestration, positioning itself as a developer-friendly tool for building, observing, and reacting to data and ML pipelines. Its primary differentiator is its emphasis on dynamic, code-first workflows that feel intuitive to Python developers. Rather than forcing ML logic into rigid DAG structures, Prefect allows for native Python code, including conditional logic, loops, and dynamic task generation, making it one of the more flexible machine learning pipeline tools available.

Unlike fully integrated platforms, Prefect focuses purely on orchestration, giving teams the freedom to choose their own tools for data storage, training, and serving. This hybrid model, where the control plane is managed by Prefect Cloud while execution agents run on your infrastructure, provides a balance of convenience and security. The platform's observability UI is a standout feature, offering deep insights into pipeline runs, logs, and scheduling. For a broader view of how it fits into the ecosystem, explore our comparison of AI workflow automation tools on findmcpservers.com.

Key Features & Considerations

A key practical benefit is Prefect's automatic retry and caching logic, which can be enabled with simple decorators. For example, you can decorate a data-fetching task from a flaky third-party API with @task(retries=3, retry_delay_seconds=10). This tells Prefect to automatically retry the task up to three times, waiting 10 seconds between attempts, to handle transient network errors without writing complex boilerplate code.
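To show what those two parameters buy you, the sketch below reimplements the retry behavior as a small standard-library decorator. It is a stand-in written for illustration, not Prefect's implementation: `with_retries`, the injectable `sleep` argument, and the `fetch_prices` task are hypothetical names. The semantics mirror the description above: one initial attempt, then up to `retries` further attempts with a fixed delay between them.

```python
import functools
import time


def with_retries(retries, retry_delay_seconds, sleep=time.sleep):
    """Retry a function up to `retries` times after its first failure."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            attempts_left = retries
            while True:
                try:
                    return func(*args, **kwargs)
                except Exception:
                    if attempts_left == 0:
                        raise  # retries exhausted: surface the last error
                    attempts_left -= 1
                    sleep(retry_delay_seconds)
        return wrapper
    return decorator


calls = {"count": 0}  # tracks how often the flaky function actually ran


# `sleep` is replaced with a no-op here so the example does not really wait.
@with_retries(retries=3, retry_delay_seconds=10, sleep=lambda seconds: None)
def fetch_prices():
    """Hypothetical flaky API call: fails twice, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient network error")
    return {"price": 42}
```

With Prefect itself, none of this boilerplate is written by hand; the decorator arguments shown in the paragraph above replace the whole `with_retries` helper.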

| Feature | Description |
|---|---|
| Pythonic API | Define pipelines (Flows) and steps (Tasks) using simple Python functions and decorators. |
| Observability UI | A rich cloud-based dashboard for monitoring runs, viewing logs, and managing event-driven automations. |
| Hybrid Execution Model | The orchestration engine is managed in the cloud, while code execution happens securely on your own infrastructure. |
| Dynamic Workflows | Natively supports conditional paths, loops, and tasks generated at runtime, ideal for complex ML logic. |

Pros:

- Excellent Developer Experience: The Python-native approach significantly lowers the barrier to entry and speeds up development.

- Flexible Deployment: Run workflows anywhere: locally, on-premises, in Kubernetes, or on serverless platforms.

- Transparent Pricing: Offers a generous free tier and clear, usage-based pricing for its cloud service.

Cons:

- Orchestration Only: Does not include built-in components like a feature store or model serving, requiring integration with other tools.

- Advanced Features Gated: Enterprise-grade features like SSO and advanced RBAC are limited to higher-priced tiers.

Website: www.prefect.io

9. Dagster (Dagster Plus and Open Source)

Dagster approaches orchestration from a unique, asset-centric perspective, making it one of the more developer-friendly machine learning pipeline tools for teams managing complex data ecosystems. Instead of focusing only on tasks, Dagster models pipelines as a graph of versioned, reusable assets like datasets, files, and machine learning models. This fundamental shift provides a declarative framework where the primary goal is producing and maintaining high-quality data assets, with exceptional observability and lineage tracking built-in.

Unlike traditional orchestrators that can become difficult to debug, Dagster's UI provides a unified view of asset health, run history, and data lineage, making it easier to trace issues from a failing model back to its source data. It offers both a fully managed cloud version, Dagster Plus, for rapid deployment and a flexible open-source option. This dual offering allows teams to start quickly in the cloud and migrate to a self-hosted environment if needed, providing a clear path for scaling operations.

Key Features & Considerations

A key advantage is its integrated testing and data quality checks. For instance, you can define a data quality check directly on a feature set asset; if the validation fails, Dagster can automatically prevent downstream model training assets from materializing. In practice, you could define an asset representing "clean_customer_data" with a rule that "age must be between 18 and 100". If a new data load contains invalid ages, the pipeline automatically halts before the model training step, preventing bad data from corrupting the model.
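The "clean_customer_data" scenario can be sketched without any orchestrator to show the control flow involved. This is plain Python rather than Dagster's asset or asset-check API; `CheckFailed`, `materialize`, and the dictionary-based result format are assumptions made for the example.

```python
class CheckFailed(Exception):
    """Raised when a data quality rule is violated."""


def find_invalid_ages(rows):
    """Return the rows that break the rule 'age must be between 18 and 100'."""
    return [row for row in rows if not 18 <= row["age"] <= 100]


def clean_customer_data(raw_rows):
    """Upstream 'asset': only produced when every row passes the age rule."""
    invalid = find_invalid_ages(raw_rows)
    if invalid:
        raise CheckFailed(f"{len(invalid)} row(s) with age outside 18-100")
    return raw_rows


def train_model(rows):
    """Downstream 'asset': a placeholder for the real training step."""
    return {"trained_on": len(rows)}


def materialize(raw_rows):
    """Build assets in dependency order, halting before training if the check fails."""
    try:
        clean_rows = clean_customer_data(raw_rows)
    except CheckFailed as exc:
        return {"status": "halted", "reason": str(exc)}
    return {"status": "ok", "model": train_model(clean_rows)}
```

Calling `materialize` with a batch that contains an age of 130 returns a `halted` status and never reaches `train_model`, which is the behavior the paragraph above describes.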

| Feature | Description |
|---|---|
| Asset-Based Modeling | Defines pipelines as graphs of data assets, providing strong lineage, cataloging, and quality checks. |
| Unified Observability | Combines orchestration with a metadata platform for deep insights into asset health and pipeline runs. |
| Flexible Deployment | Available as a managed service (Dagster Plus) or a powerful open-source framework for self-hosting. |
| Integrated Testing & Quality | Enables defining data quality rules and tests directly within the asset definitions. |

Pros:

- Excellent Observability: Asset-first approach provides superior lineage and debugging for complex data and ML stacks.

- Developer-Friendly: Strong focus on local development, testing, and a code-first philosophy.

- Rapid Setup: The managed Dagster Plus offering allows teams to get started quickly with transparent pricing.

Cons:

- Learning Curve: The asset-centric mental model requires a shift from traditional task-based orchestrators.

- Cost at Scale: The managed plan's pricing can become significant for organizations with a large number of assets and frequent runs.

Website: dagster.io

10. Flyte (Open Source) + Union Cloud (Managed Flyte)

Originally developed at Lyft, Flyte is a Kubernetes-native workflow automation platform purpose-built for scalable and reproducible machine learning and data pipelines. It differentiates itself with a strong emphasis on type safety and containerization, ensuring that every task in a workflow is a strongly-typed function that runs in its own isolated environment. This design choice guarantees reproducibility and simplifies debugging, as dependencies for one step cannot interfere with another. Flyte offers both a powerful open-source version and a managed SaaS offering called Union Cloud.

Unlike many other machine learning pipeline tools that are Python-centric, Flyte provides first-class SDKs for Python, Java, and Scala, making it adaptable for diverse engineering teams. Workflows are defined as code, versioned in Git, and can be visualized and managed through a central UI. The managed Union Cloud offering removes the significant operational overhead of maintaining a Kubernetes cluster, allowing teams to focus purely on building their ML pipelines without deep infrastructure expertise. This dual-offering approach makes Flyte accessible to both startups and large enterprises.

Key Features & Considerations

A key practical benefit of Flyte is its automatic caching of task outputs. If a task is re-run with the exact same inputs and code version, Flyte reuses the previous result instead of re-computing it. For a pipeline that processes terabytes of raw data in an initial step, this feature can save hours of compute time and significant cost during iterative development, as the expensive data processing step only runs once.

| Feature | Description |
|---|---|
| Kubernetes-Native Execution | Leverages Kubernetes for container orchestration, providing scalability and resource isolation. |
| Type-Safe SDKs | Enforces data types between pipeline tasks at compile time, reducing runtime errors. |
| Multi-Language Support | First-class SDKs in Python, Java, and Scala to accommodate different team skillsets. |
| Managed & Open-Source Options | Union Cloud provides a fully managed SaaS experience, while the open-source version offers full control. |

Pros:

- Strong Reproducibility: Containerization and type safety ensure pipelines are highly reproducible and auditable.

- Proven Scalability: Battle-tested at large-scale companies like Lyft, capable of handling complex concurrent workflows.

- Flexible Deployment: Can be self-hosted for maximum control or used as a managed service to reduce operational burden.

Cons:

- Kubernetes Expertise Required: The open-source version has a steep learning curve for teams unfamiliar with Kubernetes.

- Enterprise-Oriented Pricing: The managed Union Cloud pricing can be a significant investment for smaller teams or individual developers.

Website: flyte.org

11. Dataiku (Universal AI Platform)

Dataiku positions itself as an end-to-end, collaborative platform designed to bridge the gap between data teams and business stakeholders. It provides a unified environment where users can design, deploy, and manage data pipelines and analytics applications. Its core differentiator is its hybrid approach, offering both a visual, flow-based interface for low-code users and extensive code-based environments (Python, R, SQL) for data scientists and engineers, all within the same project. This makes it one of the more versatile machine learning pipeline tools for organizations with diverse skill sets.

Unlike tools focused purely on orchestration, Dataiku emphasizes governance and collaboration across the entire ML lifecycle. It centralizes everything from data preparation and feature engineering to model training, deployment, and monitoring. This holistic view enables robust MLOps practices, including automated model retraining triggers based on performance degradation or data drift detection. The platform is available as a self-hosted solution or as a managed cloud service, providing flexibility for different enterprise IT strategies.
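To make the idea of a drift-based retraining trigger concrete, here is a minimal sketch using the Population Stability Index (PSI), a common drift statistic. It is not Dataiku's implementation; the function names, the ten-bin layout, and the 0.2 threshold (a widely used rule of thumb) are all assumptions chosen for illustration.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(
    baseline: Sequence[float], recent: Sequence[float], threshold: float = 0.2
) -> bool:
    """Flag retraining when the feature distribution has shifted past the threshold."""
    return psi(baseline, recent) > threshold


# Illustrative data only: a uniform baseline and a sample shifted to the upper half.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

stable_flag = should_retrain(baseline, baseline)
drift_flag = should_retrain(baseline, shifted)
```

In practice a check like this would run per feature on a schedule, with the flag feeding whatever retraining job the platform exposes.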

Key Features & Considerations

A key practical benefit is the ability to create reusable "recipes" for data transformation. For example, a business analyst can visually build a data-cleaning flow that joins customer data from a CRM with sales data from a database. A data scientist can then programmatically incorporate this entire flow as the first step in a complex model training pipeline, ensuring the business logic defined by the analyst is consistently applied without rewriting code.

| Feature | Description |
|---|---|
| Visual & Code-First Workflows | A visual flow designer coexists with code notebooks and custom scripts, supporting both technical and non-technical users. |
| Model Registry & Evaluation Store | Centralizes versioned models, their performance metrics, and interpretability reports for clear governance. |
| Drift Detection & Governance | Built-in monitoring for data and concept drift, with automated actions and governance frameworks for regulated industries. |
| Broad Platform Integrations | Natively connects with major cloud platforms (AWS, Azure, GCP) and data warehouses like Snowflake and Databricks. |

Pros:

- Strong Collaboration: Unifies data scientists, analysts, and business teams on a single platform, improving project velocity.

- Enterprise Governance: Excellent for organizations requiring strict compliance, with features for auditability and lineage tracking.

- Flexibility: Supports a wide range of data sources and computational frameworks, preventing vendor lock-in to a single ecosystem.

Cons:

- High Cost at Scale: Paid enterprise editions can be a significant investment, and pricing is available only through direct sales contact.

- Learning Curve: The breadth of features means new users may face a steep learning curve to master advanced functionalities.

Website: www.dataiku.com

12. AWS Marketplace (ML & MLOps)

While not a singular tool, the AWS Marketplace serves as a critical procurement hub for discovering and deploying a vast array of machine learning pipeline tools and MLOps solutions. It acts as a curated catalog where teams can find, purchase, and launch everything from complete ML platforms like Dataiku to specialized algorithms and pre-trained models compatible with SageMaker. Its primary differentiator is the streamlined procurement process, allowing organizations to leverage existing AWS billing and agreements to quickly acquire and deploy third-party software without lengthy vendor negotiations.

Unlike direct vendor engagement, the Marketplace centralizes software management and deployment within the familiar AWS console. This simplifies governance and cost tracking by consolidating all charges onto a single AWS bill. Users can filter specifically for ML and MLOps categories to find solutions that fit their stack, whether it's a containerized model for a specific task or professional services from an AWS partner to implement a CI/CD pipeline. This makes it an essential resource for teams looking to augment their AWS environment with specialized, pre-vetted capabilities.

Key Features & Considerations

A key practical benefit is the ability to trial software with minimal commitment. For example, a team can deploy a third-party data labeling tool for a pilot project using a pay-as-you-go subscription. They can use it for a week to label a sample dataset, and if it proves valuable, convert it to a long-term contract directly through the Marketplace, all integrated with their AWS account. If not, they can terminate the subscription with no further commitment.

| Feature | Description |
|---|---|
| One-Click Procurement | Simplifies purchasing by integrating with existing AWS accounts and consolidated billing. |
| Diverse Software & Services | Offers a wide range of listings, including algorithms, model packages, full platforms, and expert services. |
| Flexible Pricing Models | Supports various models like free trials, pay-as-you-go (PAYG), and private offers from vendors. |
| Category-Specific Filtering | Dedicated categories for "Machine Learning" and "DevOps" help users quickly find relevant tools. |

Pros:

- Fast Procurement: Dramatically simplifies and accelerates the purchasing process for teams already on AWS.

- Broad Selection: Provides access to a diverse ecosystem of both established and niche ML pipeline tools.

- Centralized Billing: Consolidates software costs into a single AWS invoice for easier budget management.

Cons:

- Variable Quality: As a marketplace, the quality and support level vary significantly between vendors, requiring careful due diligence.

- Regional Availability: Some software listings and pricing models are region-specific and may not be available globally.

Website: aws.amazon.com/marketplace/

Machine Learning Pipeline Tools Comparison

| Platform | Core Features | User Experience / Quality Metrics | Value Proposition | Target Audience | Price Points / Notes |
|---|---|---|---|---|---|
| FindMCPServers | MCP-based client-server architecture; categorized AI tool servers (DB, search, code, API, browser automation) | Robust security with audit logs; active community engagement | Simplifies LLM integration with external tools; supports multi-agent workflows | AI developers, data scientists, engineers | No clear pricing; resource and discovery hub |
| Amazon SageMaker (Pipelines) | Serverless ML pipeline orchestration; AWS ecosystem integration | Visual pipeline editor; execution tracking; model registry | Enterprise-scale AWS-based ML workflow orchestration | Teams using AWS cloud stack | Pay for underlying jobs; complex cost visibility |
| Google Cloud Vertex AI (Pipelines) | Managed Kubeflow pipelines with BigQuery & Vertex AI integration | Strong observability; fine-grained IAM controls | Simplifies ML pipelines with GCP services and governance | Kubeflow, TFX users on GCP | Cloud pricing; mostly GCP-optimized |
| Microsoft Azure ML (Pipelines) | Pipeline authoring/versioning; REST endpoints; integrated CI/CD | Enterprise governance; cost management tools | Full Azure integration for reproducible pipelines | Enterprises using Azure | Complex pricing; migration from SDK v1 needed |
| Databricks ML (managed MLflow) | Managed MLflow for tracking, registry, serving; Unity Catalog integration | Mature ecosystem; unified data & ML governance | Combines data engineering and ML with governance | Data scientists, enterprises using lakehouse | Usage-based pricing; can be costly |
| Kubeflow (Kubeflow Pipelines) | Kubernetes-native, reusable, containerized pipelines; Argo integration | Open-source with UI and SDK; active community | Full control and customization on Kubernetes | Teams needing self-hosted solutions | Free and open source; requires Kubernetes expertise |
| TensorFlow Extended (TFX) | Prebuilt TensorFlow pipeline components; metadata tracking | Production-ready; strong docs; community support | Scalable pipelines focused on TensorFlow workloads | TensorFlow developers | Open source; needs external orchestration |
| Prefect (Cloud & Open Source) | Pythonic workflows; retry, caching, scheduling; cloud UI | Fast onboarding; clear SaaS pricing with free tier | Flexible hybrid execution for data and ML workflows | Python developers, small to mid teams | Free tier available; SaaS pricing clear |
| Dagster (Dagster Plus & OSS) | Asset-based modeling; lineage, testing, metadata management | Excellent observability; managed cloud option | Strong ELT and ML orchestration with governance | Data and ML teams; enterprises | Managed plan can be costly |
| Flyte (Open Source + Union Cloud) | Kubernetes-native, scalable workflows; multi-language SDKs | Proven at scale; managed SaaS option for ease | Scalable, type-safe ML/data pipelines with SaaS | Teams needing scalable workflows | OSS free; managed SaaS has enterprise-oriented pricing |
| Dataiku (Universal AI Platform) | Visual & code workflows; model registry; drift detection | Strong collaboration; enterprise governance | End-to-end AI platform with broad integrations | Data science & business teams | Paid editions require sales; costly at scale |
| AWS Marketplace (ML & MLOps) | Curated ML and MLOps software/services; one-click purchase | Broad selection; integrated billing | Fast procurement of ML tools within AWS ecosystem | AWS users needing diverse ML tools | Pricing varies per vendor; some listings region-specific |

Choosing Your MLOps Foundation for Future Success

Navigating the landscape of machine learning pipeline tools can feel overwhelming, but making an informed choice is a cornerstone of a successful MLOps strategy. Throughout this article, we've explored a diverse set of powerful solutions, from the deeply integrated, managed services of major cloud providers to the flexible, community-driven powerhouses of the open-source world. The journey from a manual, script-based workflow to a fully automated, reproducible pipeline is a significant leap in maturity for any data science team.

The central theme is clear: there is no single "best" tool. The ideal choice is a direct reflection of your team's unique context. A startup with a small, agile team might prioritize the rapid development and managed infrastructure of Amazon SageMaker or Vertex AI. Conversely, a large enterprise with a dedicated MLOps team and a commitment to a multi-cloud or on-premise strategy might gravitate towards the unparalleled control and customization offered by Kubeflow or Flyte.

Key Takeaways and Decision Framework

As you move forward, the decision-making process shouldn't be about finding a tool with the longest feature list. Instead, it should be a strategic evaluation of how a tool aligns with your operational realities. Your goal is to select a foundation that not only solves today's problems but also provides the flexibility to adapt to tomorrow's challenges.

Consider these critical decision factors:

- Ecosystem Integration: How well does the tool fit into your existing cloud environment and data stack? If your entire data lake resides in Amazon S3 and you run workloads on Amazon EKS, a tool like Amazon SageMaker Pipelines offers native integrations that can substantially reduce friction and setup time.

- Team Skillset: What are the core competencies of your team? If your engineers are Kubernetes experts, Kubeflow is a natural fit. If they are primarily Python developers and data scientists, a Python-native framework like Prefect or Dagster will feel more intuitive and lower the barrier to entry.

- Scalability and Complexity: What is the anticipated scale of your ML initiatives? For building complex, multi-stage, and type-safe data and ML workflows at scale, a tool like Flyte is specifically engineered for robustness. For projects that require unifying data engineering and machine learning on a massive scale, Databricks provides a cohesive platform.

- The Rise of Interoperability: A critical trend to watch is the decoupling of model logic from the underlying infrastructure. This is where emerging standards like the Model Context Protocol (MCP) become invaluable. An MCP-compliant model can request external data or services in a standardized way, making it portable across different machine learning pipeline tools. Your pipeline, whether it's built in Azure ML or TFX, simply becomes the orchestrator that delivers the model; the model itself handles its own real-time data needs.
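To show what "requesting external data in a standardized way" can look like on the wire, the sketch below builds a tool-invocation message. It assumes, based on the published MCP specification, that messages follow JSON-RPC 2.0 and that tools are invoked with a `tools/call` method; the tool name `query_feature_store` and its arguments are hypothetical, and transport, initialization, and response handling are omitted. Treat it as an illustration and consult the specification for the authoritative message shapes.

```python
import json
from itertools import count
from typing import Any, Dict

# Monotonic request ids so responses can be matched to requests.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request asking a server to run one tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool and arguments, for illustration only.
message = build_tool_call("query_feature_store", {"customer_id": "c-123"})
```

Because the message shape does not depend on which orchestrator deployed the model, the same request could be issued from a pipeline built in any of the tools covered here.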

Your Actionable Next Steps

Armed with this information, you can move from analysis to action. Don't get stuck in decision paralysis.

- Shortlist 2-3 Tools: Based on the criteria above, select the top contenders that align with your environment and team.

- Define a Pilot Project: Choose a real, but non-critical, machine learning workflow. A great candidate is often a model retraining pipeline for an existing, stable model.

- Build a Proof of Concept (PoC): Implement the pilot project pipeline in each of your shortlisted tools. This hands-on experience is the single most effective way to understand a tool's true strengths and weaknesses, its learning curve, and its day-to-day usability.

- Evaluate and Decide: Assess the PoCs based on developer experience, performance, cost, and maintenance overhead. The best choice will become evident through practical application.
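One simple way to structure the final evaluation step is a weighted scoring matrix. The sketch below is only an illustration: the weights, the 1-to-5 scores, and the names `tool_a` and `tool_b` are placeholders rather than ratings of any real product, and your team should substitute its own criteria and PoC findings.

```python
from typing import Dict

# Relative importance of each criterion; placeholder values that sum to 1.0.
WEIGHTS: Dict[str, float] = {
    "developer_experience": 0.35,
    "performance": 0.25,
    "cost": 0.20,
    "maintenance": 0.20,
}

# Placeholder PoC scores on a 1-5 scale (higher is better).
POC_SCORES: Dict[str, Dict[str, int]] = {
    "tool_a": {"developer_experience": 4, "performance": 3, "cost": 2, "maintenance": 3},
    "tool_b": {"developer_experience": 3, "performance": 4, "cost": 4, "maintenance": 4},
}


def weighted_score(scores: Dict[str, int], weights: Dict[str, float]) -> float:
    """Combine per-criterion scores into a single weighted total."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


# Rank candidates from highest to lowest weighted total.
ranking = sorted(
    POC_SCORES,
    key=lambda tool: weighted_score(POC_SCORES[tool], WEIGHTS),
    reverse=True,
)
```

Agreeing on the weights before running the PoCs helps keep the comparison honest, since it prevents the criteria from being tuned afterward to favor a preferred tool.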

Ultimately, the goal of implementing robust machine learning pipeline tools is to empower your team to focus on what truly matters: building innovative models that drive business value. By automating the operational overhead, you create an environment where creativity and experimentation can flourish, ensuring your MLOps foundation is not just a technical solution, but a strategic enabler for future success.

Ready to ensure your models can seamlessly access any data or tool, regardless of the pipeline you choose? Explore how the Model Context Protocol (MCP) can future-proof your AI stack. Discover a growing ecosystem of compliant models and services at FindMCPServers and build AI that is truly interoperable and independent. Visit FindMCPServers to learn more.